A team of students participating in Cornell University's Tech Challenge program has developed a machine learning application that attempts to break the final frontier in language processing—identifying sarcasm. This could change everything… maybe.

TrueRatr, a collaboration between Cornell Tech and Bloomberg, is intended to screen out sarcasm in product reviews. But the technology has been open sourced (and posted to GitHub) so that others can modify it to deal with other types of text-based eye-rolling.

Christopher Hong of Bloomberg acted as mentor to the interdisciplinary student team behind TrueRatr, a group of MBA candidates and engineering and design graduate students: Mengjue Wang, Ming Chen, Hesed Kim, Brendan Ritter, Shreyas Kulkarni, and Karan Bir. Hong had researched sarcasm detection himself while working on his 2014 master's thesis. "Everyone uses sarcasm at some point," Hong told Ars. "Most of the time, there's some intent of harm, but sometimes it's the opposite. It's kind of part of our nature."

So it should be really easy for software to detect sarcasm… not. The problem has been that "the definition of sarcasm is not so specific," Hong explained. Past efforts to catch sarcasm have used techniques like watching for cue words ("yeah, right"), or the use of punctuation, such as ellipses. But in his research, Hong looked at what he calls "sentiment shift"—the use of both positive and negative words in the same phrase.

Hong explained the concept using the example sentence, "I love getting yelled at"—"'I love', which is a positive sentiment, and then 'getting yelled at' is a negative statement—that in itself would indicate some sort of sarcasm."
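As a rough illustration of the "sentiment shift" idea, here is a minimal Python sketch that flags a phrase when it contains both a positive and a negative word. The word lists and function names are assumptions made up for this example; they are not taken from Hong's thesis or from the TrueRatr code, which would rely on far richer sentiment resources.

```python
import re

# Tiny illustrative lexicons (assumptions for this sketch, not from TrueRatr).
POSITIVE_WORDS = {"love", "great", "awesome", "wonderful", "thanks"}
NEGATIVE_WORDS = {"yelled", "broken", "crash", "terrible", "hate"}


def sentiment_counts(phrase):
    """Return (positive_hits, negative_hits) for the words in a phrase."""
    words = re.findall(r"[a-z']+", phrase.lower())
    positive = sum(1 for word in words if word in POSITIVE_WORDS)
    negative = sum(1 for word in words if word in NEGATIVE_WORDS)
    return positive, negative


def has_sentiment_shift(phrase):
    """True when a phrase mixes at least one positive and one negative word."""
    positive, negative = sentiment_counts(phrase)
    return positive > 0 and negative > 0


if __name__ == "__main__":
    print(has_sentiment_shift("I love getting yelled at"))  # mixes both polarities
    print(has_sentiment_shift("I love this app"))           # positive words only
```

A word-list check like this would misfire on plenty of sincere sentences, which is presumably why the signal was fed into a trained model rather than used as a rule on its own.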

Using that sort of sentiment analysis, Hong was able to train a system to the point where it had an F1 precision score (the number of accurate detections relative to the number of both true and false positives) at a document level (for a whole passage, rather than individual sentences) of 71 percent for his test set. That's better than a coin-flip, at least. But it was based on a fairly small "corpus" of sarcasm—only using a total of 50 random sarcastic and 50 random non-sarcastic Amazon reviews as his test set. So there was no way to know how well the technique would work in the real world.
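For readers unfamiliar with the metrics: precision is the ratio described in the parenthetical above, while an F1 score also folds in recall, the share of genuinely sarcastic items the system managed to catch. The sketch below computes all three from a handful of made-up labels (1 for sarcastic, 0 for not); the data is invented for illustration and has nothing to do with Hong's test set.

```python
def precision_recall_f1(true_labels, predicted_labels):
    """Compute precision, recall, and F1 for binary labels (1 = sarcastic)."""
    pairs = list(zip(true_labels, predicted_labels))
    true_pos = sum(1 for truth, guess in pairs if truth == 1 and guess == 1)
    false_pos = sum(1 for truth, guess in pairs if truth == 0 and guess == 1)
    false_neg = sum(1 for truth, guess in pairs if truth == 1 and guess == 0)

    flagged = true_pos + false_pos        # everything the system called sarcastic
    actual = true_pos + false_neg         # everything that really was sarcastic
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0

    # F1 is the harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Made-up labels for eight reviews, purely for illustration.
    truth = [1, 1, 1, 1, 0, 0, 0, 0]
    guesses = [1, 1, 0, 0, 1, 0, 0, 0]
    precision, recall, f1 = precision_recall_f1(truth, guesses)
    print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

In this toy case the system flags three reviews and gets two right, so precision is two-thirds, but it misses half of the sarcastic reviews, which drags F1 down.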

To build a better snark trap, the Cornell Tech team launched the "Open Sarcasm Project"—an effort to crowdsource the collection of sarcastic product reviews. This totally worked… too slowly for the three months the team had to complete the project. Elena Filatova, now teaching at Fordham University, provided a batch of 437 "high quality" sarcastic and non-sarcastic Amazon reviews she used in her doctoral research at Columbia. The students turned to Amazon's Mechanical Turk paid crowdsourcing service to obtain 158 more sarcastic reviews and collected 99 sarcastic reviews and 257 non-sarcastic reviews on their own, achieving a total training set of 1,188. On a test sample of 100 sarcastic and 100 non-sarcastic Amazon reviews, the TrueRatr system—based on a "random forest" decision tree algorithm rather than the model originally used by Hong—scored slightly better than Hong's original, achieving a 75 percent precision score.

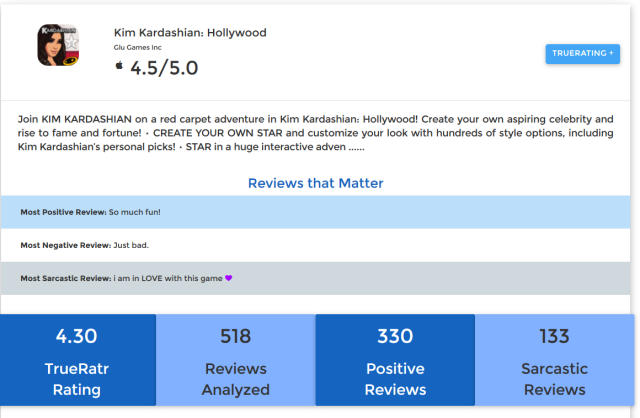

To make the best use of that sensitivity to sarcasm, the Cornell Tech students crafted TrueRatr into something useful to consumers: a tool for filtering the distortion caused by sarcastic reviews out of the ratings of Mac OS X and iOS applications. The TrueRatr site analyzes the reviews posted on Apple's App Stores and eliminates the ones it determines to be sarcastic, adjusting the overall score accordingly. By clicking on an application's listing, a user can get an analysis of what its "true" rating is and also find the most sarcastic review. Sometimes, that's a plus for the app in question: removing sarcastic reviews from Uber's app raises the transportation app's overall score from 3.5 to nearly 4 out of 5 stars. On the other hand, Grand Theft Auto: Chinatown had its score drop under TrueRatr's gaze from 4.5 to 3.9 out of 5 stars.
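The article does not spell out how TrueRatr recomputes a score, but the basic idea can be sketched under one assumption: that the adjusted rating is simply the average star rating of the reviews left after the flagged ones are dropped. The review data and function names below are hypothetical.

```python
def average_rating(star_ratings):
    """Mean of a list of star ratings, or None if the list is empty."""
    if not star_ratings:
        return None
    return sum(star_ratings) / len(star_ratings)


def adjusted_rating(reviews):
    """Average the stars of reviews that were NOT flagged as sarcastic.

    `reviews` is a list of (stars, is_sarcastic) tuples; this shape is an
    assumption for the sketch, not TrueRatr's actual data model.
    """
    kept = [stars for stars, is_sarcastic in reviews if not is_sarcastic]
    return average_rating(kept)


if __name__ == "__main__":
    # Made-up reviews: two one-star reviews were flagged as sarcastic.
    reviews = [(5, False), (1, True), (4, False), (1, True), (3, False)]
    raw = average_rating([stars for stars, _ in reviews])
    print(f"raw={raw:.1f} adjusted={adjusted_rating(reviews):.1f}")
```

Whether the score rises or falls depends entirely on which star ratings the flagged reviews carried: dropping sarcastic one-star reviews lifts the average, while dropping sarcastic five-star reviews lowers it.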

A random sampling of TrueRatr's results leaves some room for doubt about how helpful it is to screen out sarcasm in reviews. In fact, for some applications, Snapchat among them, the sarcastic reviews outnumber the positive ones, and screening them out raises Snapchat's rating from 3.0 to 3.82. And it's possible that some of the sarcastic reviews are merely… dumb ones, such as this one rated as most sarcastic: "I REALLY FVCKING LOVE THIS APP BUT THE CAMERA OF THE LENS IS NOT WORKING ON MY SNAPCHAT!! PLEASE FIX IT!! THANKS!!"

By releasing TrueRatr as open source, the students hope to get more people to test larger samples of text against the algorithm and to improve its performance even further over time. Bloomberg doesn't currently have plans to use the tool internally, Hong said. That's probably because no one ever uses sarcasm when they're writing the news…